GlobEnc: Quantifying Global Token Attribution by Incorporating the Whole Encoder Layer in Transformers

Published: NAACL 2022

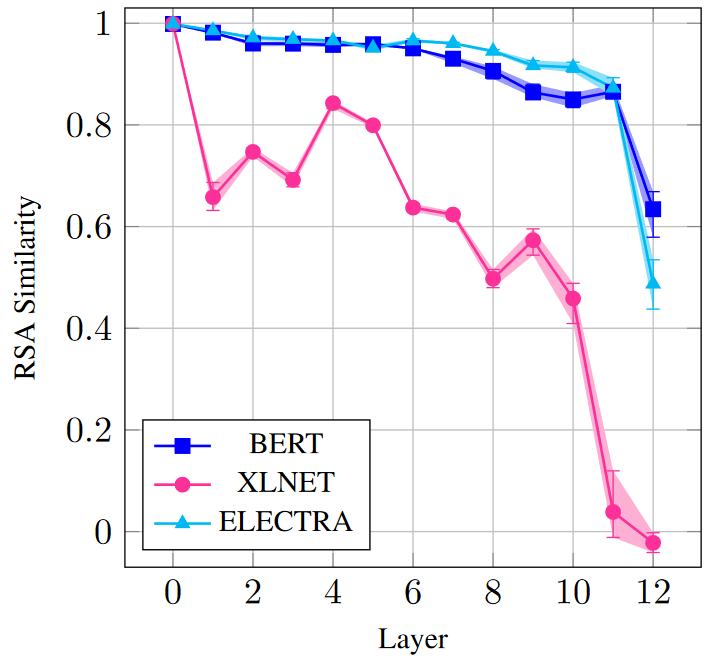

- We expand the scope of analysis from the attention block in Transformers to the whole encoder layer.

- Our method significantly improves over existing techniques for quantifying global token attributions.

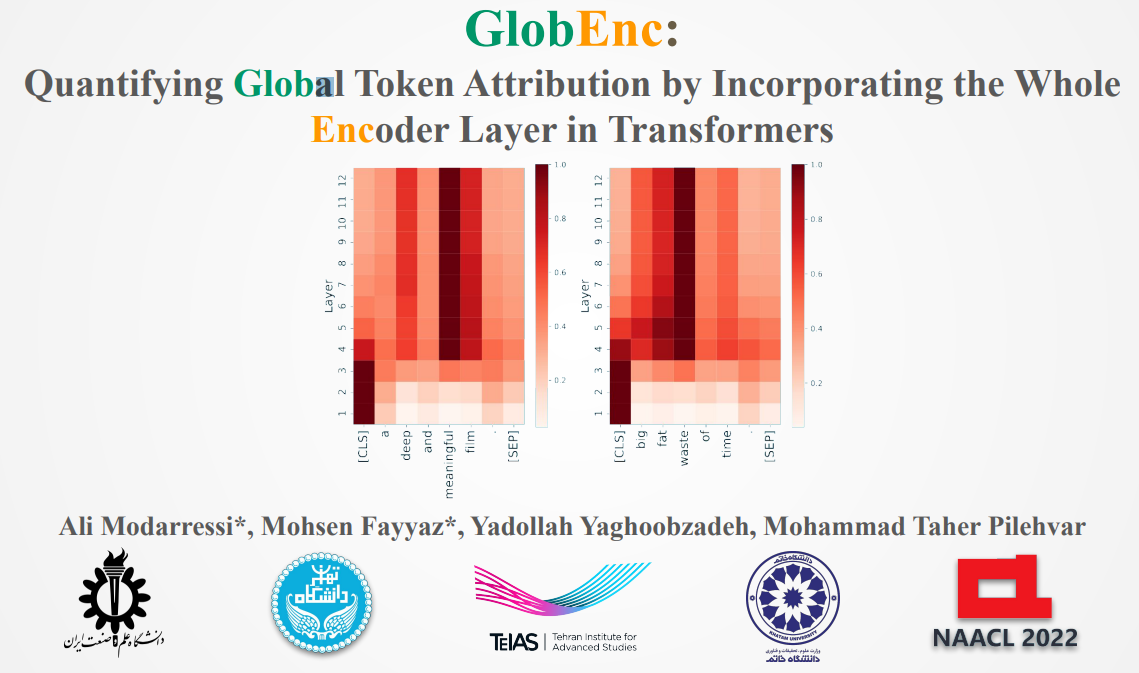

- We qualitatively demonstrate that the attributions obtained by our method are plausibly interpretable.

